A cooperating process is one that can affect or be affected by other processes executing in the system. Cooperating processes can either directly share a logical address space (that is, both code and data) or be allowed to share data only through files or messages. The former case is achieved through the use of threads, discussed in Chapter 4. Concurrent access to shared data may result in data inconsistency, however. In this chapter, we discuss various mechanisms to ensure the orderly execution of cooperating processes that share a logical address space, so that data consistency is maintained.

Background

- In Chapter 3, we developed a model of a system consisting of cooperating sequential processes or threads, all running asynchronously and possibly sharing data.

- Specifically, in Section 3.4.1, we described how a bounded buffer could be used to enable processes to share memory.

- Let's return to our consideration of the bounded buffer.

- As we pointed out, our original solution allowed at most BUFFER_SIZE - 1 items in the buffer at the same time.

- Suppose we want to modify the algorithm to remedy this deficiency.

- One possibility is to add an integer variable counter, initialized to 0.

- Counter is incremented every time we add a new item to the buffer and is decremented every time we remove one item from the buffer.

- We would arrive at incorrect state because we allowed both processes to manipulate the variable counter concurrently.

- several processes access and manipulate the same data concurrently and the outcome of the execution depends on the particular order in which the access takes place, is called a race condition

The Critical Section Problem

- The important feature of the system is that, when one process is executing in its critical section, no other process is to be allowed to execute in its critical section.

- No two processes are executing in their critical sections at the time

- The critical-section problem is to design a protocol that the processes can use to cooperate.

- Entry section

- Each process must request permission to enter its critical section.

- Exit section

- follow the entry section, used to leave critical section.

- Critical section

- Code in it should not be interleaved or overlapped

- Remainder section

- remaining code

- A solution to the critical-section problem must satisfy the following three requirements:

- Mutual exclusion.

- If process Pi is executing in its critical section, then no other processes can be executing in their critical sections.

- Progress. (Freedom from deadlock)

- If no process is executing in its critical section and some processes wish to enter their critical sections, then only those processes that are not executing in their remainder sections can participate in deciding which will enter its critical section next, and this selection cannot be postponed indefinitely.

- Bounded waiting (Freedom from starvation)

- There exists a bound, or limit, on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

- We assume that each process is executing at a nonzero speed. Assume concerning the relative of the n processes, at a given point in time, many kernel-mode processes may be active in the operating system.

- As a result, the code implementing an operating system (kernel code) is subject to several possible race conditions.

- Consider as an example a kernel data structure that maintains a list of all open files in the system.

- This list must be modified when a new file is opened or closed (adding the file to the list or removing it from the list).

- If two processes were to open files simultaneously, the separate updates to this list could result in a race condition

- Other kernel data structures that are prone to possible race conditions include structures for maintaining memory allocation, for maintaining process lists, and for interrupt handling.

- It is up to kernel developers to ensure that the operating system is free from such race conditions.

- Two general approaches are used to handle critical sections in operating systems:

- preemptive kernels

- Allows a process to be preempted while it is running in kernel mode

- Must be carefully designed to ensure that shared kernel data are free from race conditions

- Especially difficult to design for SMP architectures, since in these environments it is possible for two kernel-mode processes to run simultaneously on different processors

- More suitable for real-time programming, as it will allow a real-time process to preempt a process currently running in the kernel.

- More responsive, since there is less risk that a kernel-mode process will run for an arbitrarily long period before

relinquishing (give up) the processor to waiting processes - nonpreemptive kernels

- Does not allow a process running in kernel mode to be preempt

- Obviously, it is essentially free from race conditions on kernel data structures, as only one process is active in the kernel at a time.

- A kernel-mode process will run until it exits kernel mode, blocks, or voluntarily yields control of the CPU.

Peterson's Solution

- Two process solution

- Assume that the LOAD and STORE instructions are atomic; that is, cannot be interrupted.

- The two processes share two variables:

- int victim;

- Boolean flag[2]

- The variable victim indicates whose turn it is to wait.

- The flag array is used to indicate if a process is ready to enter the critical section. flag[i] = true implies that process Pi is ready!

Synchronization Hardware

- Peterson's are not guaranteed to work on modern computer architectures.

- Instead, we can generally state that any solution to the critical-section problem requires a simple tool-a lock.

- Race conditions are prevented by requiring that critical regions be protected by locks.

- That is, a process must acquire a lock before entering a critical section; it releases the lock when it exits the critical section.

- All these solutions are based on the premise of locking; however, as we shall see, the designs of such locks can be quite sophisticated.

- Start by presenting some simple hardware instructions that are available on many systems and showing how they can be used effectively in solving the critical-section problem.

- Hardware features can make any programming task easier and improve system efficiency.

- The critical-section problem could be solved simply in a uniprocessor environment if we could prevent interrupts from occurring while a shared variable was being modified.

- In this manner, we could be sure that the current sequence of instructions would be allowed to execute in order without preemption.

- No other instructions would be run, so no unexpected modifications could be made to the shared variable.

- Often the approach taken by nonpreemptive kernels.

- Unfortunately, this solution is not as feasible in a multiprocessor environment.

- Disabling interrupts on a multiprocessor can be time consuming, as the message is passed to all the processors. This message passing delays entry into each critical section, and system efficiency decreases.

- Also consider the effect on a system's clock if the clock is kept updated by interrupts.

- Many modern computer systems therefore provide special hardware instructions that allow us either to test and modify the content of a word or to swap the contents of two words atomically, that is, as one uninterruptible unit.

- We can use these special instructions to solve the critical-section problem in a relatively simple manner.

- Rather than discussing one specific instruction for one specific machine, we abstract the main concepts behind these types of instructions by describing the TestAndSet () and Swap() instructions.

- Support more than two processes

Semaphore (信號機)

- The hardware-based solutions to the critical-section problem presented in Section 6.4 are complicated for application programmers to use.

- To overcome this difficulty, we can use a synchronization tool called a semaphore.

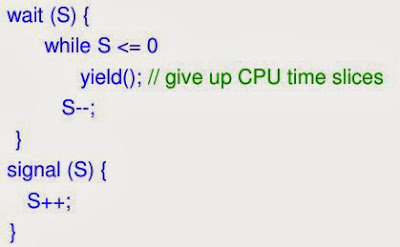

- A semaphore S is an integer variable accessed only through two standard atomic operations: wait () and signal ().

- wait () operation was originally termed P

- signal() was originally called V

- All modifications to the integer value of the semaphore in the wait () and signal() operations must be executed indivisibly.

- When one process modifies the semaphore value, no other process can simultaneously modify that same semaphore value.

- In addition, in the case of wait (S), the testing of the integer value of S (S <= 0), as well as its possible modification (S--), must be executed without interruption.

- Operating systems often distinguish between counting and binary semaphores.

- counting semaphore

- value can range over an unrestricted domain

- can be used to control access to a given resource consisting of a finite number of instances

- The semaphore is initialized to the number of resources available.

- Each process that wishes to use a resource performs a wait() operation on the semaphore (thereby decrementing the

count). - When a process releases a resource, it performs a signal() operation (incrementing the count).

- When the count for the semaphore goes to 0, all resources are being used.

- After that, processes that wish to use a resource will block until the count becomes greater than 0.

- binary semaphore

- value can range only between 0 and 1

- On some systems are known as mutex locks, as they are locks that provide mutual exclusion.

- Can be used to deal with the critical-section problem for mulltiple processes.

- n processes share a semaphore, mutex, initialized to 1.

- We can also use semaphores to solve various synchronization problems.

- For example, consider two concurrently running processes: P1 with a statement S1 and P2 with a statement S2.

- Suppose we require that S2 be executed only after S1 has completed.

- We can implement this scheme readily by letting P1 and P1 share a common semaphore synch, initialized to 0, and by inserting the statement below in P1

- and statement below in P2

- Because synch is initialized to 0, P2 will execute S2 only after P1 has invoked signal (synch), which is after statement S1 has been executed.

Implementation

- The main disadvantage of the semaphore definition given here is that it requires busy waiting.

- While a process is in its critical section, any other process that tries to enter its critical section must loop continuously in the entry code.

- This continual looping is clearly a problem in a real multi-programming system, where a single CPU is shared among many processes.

- Busy waiting wastes CPU cycles that some other process might be able to use productively.

- This type of semaphore is also called a spinlock because the process "spins" while waiting for the lock.

- Spinlocks do have an advantage in that no context switch is required when a process must wait on a lock, and a context switch may take considerable time.

- Thus, when locks are expected to be held for short times, spinlocks are useful; they are often employed on multiprocessor systems where one thread can "spin" on one processor while another thread performs its critical section on another processor.)

- To overcome the need for busy waiting, we can modify the definition of the wait() and signal() semaphore operations.

- When a process executes the wait () operation and finds that the semaphore value is not positive, it must wait.

- However, rather than engaging in busy waiting, the process can block itself.

- The block operation places a process into a waiting queue associated with the semaphore, and the state of the process is switched to the waiting state.

- Then control is transferred to the CPU scheduler, which selects another process to execute.

- A process that is blocked, waiting on a semaphore S, should be restarted when some other process executes a signal() operation.

- The process is restarted by a wakeup () operation, which changes the process from the waiting state to the ready state.

- The process is then placed in the ready queue. (The CPU may or may not be switched from the running process to the newly ready process, depending on the CPU-scheduling algorithm.)

- The block() operation suspends the process that invokes it.

- The wakeup(P)operation resumes the execution of a blocked process P.

- These two operations are provided by the operating system as basic system calls.

- Note that in this implementation, semaphore values may be negative, although semaphore values are never negative under the classical definition of semaphores with busy waiting.

- If a semaphore value is negative, its magnitude is the number of processes waiting on that semaphore.

- This fact results from switching the order of the decrement and the test in the implementation of the wait () operation.

- The list of waiting processes can be easily implemented by a link field in each process control block (PCB).

- Each semaphore contains an integer value and a pointer to a list of PCBs.

- One way to add and remove processes from the list so as to ensure bounded waiting is to use a FIFO queue, where the semaphore contains both head and tail pointers to the queue.

- In general the list can use any queueing strategy.

- Correct usage of semaphores does not depend on a particular queueing strategy for the semaphore lists.

- It is critical that semaphores be executed atomically.

- no two processes can execute wait() and signal() operations on the same semaphore at the same time.

- in a single-processor environment (only one CPU exists), we can solve it by simply inhibiting interrupts during the time the wait() and signal() operations are executing.

- once interrupts are inhibited, instructions from different processes cannot be interleaved.

- Only the currently running process executes until interrupts are reenabled and the scheduler can regain control.

- In a multiprocessor environment, interrupts must be disabled on every processor; otherwise, instructions from different processes (running on different processors) may be interleaved in some arbitrary way.

- Disabling interrupts on every processor can be a difficult task and furthermore can seriously diminish performance.

- Therefore, SMP systems must provide alternative locking techniques-such as spinlocks-to ensure that wait() and signal() are performed atomically.

- It is important to admit that we have not completely eliminated busy waiting with this definition of the wait () and signal () operations.

- Rather, we have moved busy waiting from the entry section to the critical sections of application programs.

- Furthermore, we have limited busy waiting to the critical sections of the wait () and signal () opera times, and these sections are short (if properly coded, they should be no more than about ten instructions).

- Thus, the critical section is almost never occupied, and busy waiting occurs rarely, and then for only a short time.

- An entirely different situation exists with application programs whose critical sections may be long (minutes or even hours) or may almost always be occupied.

- In such cases busy waiting is extremely inefficient.

Deadlock and Starvation

- The implementation of a semaphore with a waiting queue may result in a situation where two or more processes are waiting indefinitely for an event that can be caused only by one of the waiting processes.

- The event in question is the execution of a signal() operation

- When such a state is reached, these processes are said to be deadlock.

- To illustrate this, we consider a system consisting of two processes, P0 and P1, each accessing two semaphores, S and Q, set to the value 1:

- Starvation – indefinite blocking. A process may never be removed from the semaphore queue in which it is suspended

- Priority Inversion - Scheduling problem when lower-priority process holds a lock needed by higher-priority process

- When a higher-priority process needs to read or modify kernel data that are currently being accessed by a lower-priority process-or a chain of lower-priority processes.

- Since kernel data are typically protected with a lock, the higher-priority process will have to wait for a lower-priority one to finish with the resource.

- The situation becomes more complicated if the lower-priority process is preempted in favor of another process with a higher priority.

- As an example, assume we have three processes, L, M, and H, whose priorities follow the order L < M < H.

- Assume that process H requires resource R, which is currently being accessed by process L.

- Ordinarily, process H would wait for L to finish using resource R.

- However, now suppose that process M becomes runnable, thereby preempting process L.

- Indirectly, a process with a lower priority-process M-has affected how long process H must wait for L to relinquish resource R.

- This problem is known as priority inversion

- It occurs only in systems with more than two priorities, so one solution is to have only two priorities.

- That is insufficient for most general-purpose operating systems, however.

- Typically these systems solve the problem by implementing a priority-inheritance.

- According to this protocol, all processes that are accessing resources needed by a higher-priority process inherit the higher priority until they are finished with the resources in question.

- When they are finished, their priorities revert to their original values.

- A priority-inheritance protocol would allow process L to temporarily inherit the priority of process H, thereby preventing process M from preempting its execution.

- When process L had finished using resource R, it would relinquish its inherited priority from H and assume its original priority.

- Because resource R would now be available, process H-not M-would run next.

Classical Problems of Synchronization

Bounded-Buffer Problem- N buffers, each can hold one item

- Semaphore full initialized to the value 0

- Semaphore empty initialized to the value N.

-

efficient wait (no busy loop) && atomotic

- N buffers, each can hold one item

- Semaphore mutex initialized to the value 1

- Semaphore full initialized to the value 0

- Semaphore empty initialized to the value N.

Readers-Writers Problem

- A data set is shared among a number of concurrent processes

- Readers – only read the data set; they do not perform any updates

- Writers – can both read and write

- Problem – allow multiple readers to read at the same time. Only one single writer can access the shared data at the same time

- Shared Data

- Data set

- Semaphore mutex initialized to 1

- Semaphore wrt initialized to 1

- Integer readcount initialized to 0

Problems with Semaphores

- Used for 2 independent purposes

- Mutual exclusion

- Condition Synchronization

- Hard to get right

- signal (mutex) .... wait (mutex)

- wait (mutex) ... wait (mutex)

- Omitting of wait (mutex) or signal (mutex) (or both)

- Small mistake easily leads to deadlock / livelock

- Would it be nice to have?

- Separation of mutual exclusion and condition synchronization

- Automatic wait and signal p, li { white-space: pre-wrap; }

Semaphore 可以用來保護兩個或多個關鍵程式區塊,這些關鍵程式區塊段不能被同時呼叫使用。在進入一個關鍵程式區塊之前,執行緖必須獲取一個Semaphore。如果關鍵程式區塊中沒有任何執行緖,那麼執行緖會立即進入該程式區中的那個部分。一旦該關鍵程式區塊完成了,那麼該執行緖必須釋放Semaphore 。其它想進入該關鍵程式區塊的執行緖必須等待直到第一個執行緖釋放信號量。為了完成這個過程,需要創建一個Semaphore,然後將Acquire Semaphore VI以及Release Semaphore VI分別放置在每個關鍵程式區塊的首末端。確認這些Semaphore VI引用的是初始創建的Semaphore。

Reference:

Operating System Concepts 8th, by Silberschatz, Galvin, Gagne

Wikipedia

沒有留言:

張貼留言